Look around: what is happening? Australia, AI, Ghosn, Google, Suleimani, Starlink, Trump, TikTok. The world is an eruptive flux of frequently toxic emergent behavior, and every unexpected event is laced with subtle interconnected nuances. Stephen Hawking predicted this would be “the century of complexity.” He was talking about theoretical physics, but he was dead right about technology, societies, and geopolitics too.

Let’s try to define terms. How can we measure complexity? Seth Lloyd of MIT, in a paper which drily begins “The world has grown more complex recently, and the number of ways of measuring complexity has grown even faster,” proposed three key categories: difficulty of description, difficulty of creation, and degree of organization. Using those three criteria, it seems apparent at a glance that both our societies and our technologies are far more complex than they have ever been, and rapidly growing even more so.

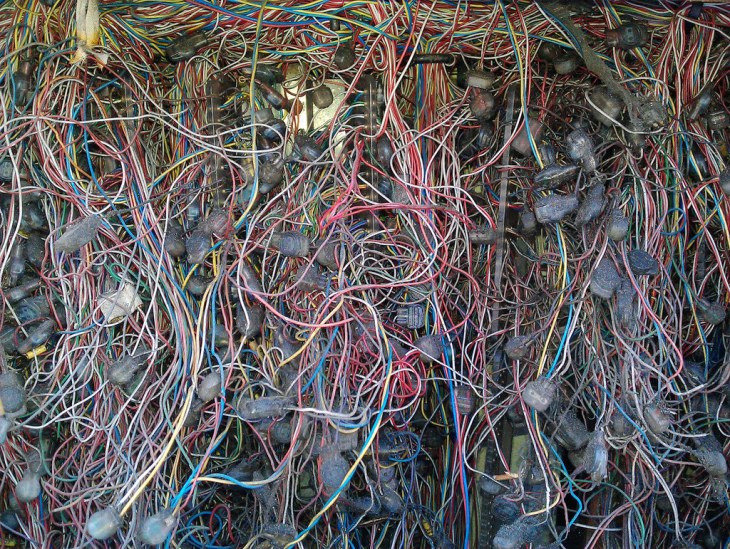

The thing is, complexity is the enemy. Ask any engineer … especially a security engineer. Ask the ghost of Steve Jobs. Adding complexity to solve a problem may bring a short-term benefit, but it invariably comes with an ever-accumulating long-term cost. Any human mind can only encompass so much complexity before it gives up and starts making slashing oversimplifications with an accompanying risk of terrible mistakes.

You may have noted that those human minds empowered to make major decisions are often those least suited to grappling with nuanced complexity. This itself is arguably a lingering effect of growing complexity. Even the simple concept of democracy has grown highly complex — party registration, primaries, fundraising, misinformation, gerrymandering, voter rolls, hanging chads, voting machines — and mapping a single vote for a representative to dozens if not hundreds of complex issues is impossible, even if you’re willing to consider all those issues in depth, which most people aren’t.

Complexity theory is a rich field, but it’s unclear how it can help ordinary people trying to make sense of their world. In practice, people deal with complexity by coming up with simplified models close enough to the complex reality to be workable. These models can be dangerous (“everyone just needs to learn to code,” “software does the same thing every time it is run,” “democracies are benevolent”), but they have been useful enough to allow fitful progress.

In software, we at least recognize this as a problem. We pay lip service to the glories of erasing code, of simplifying functions, of eliminating side effects and state, of deprecating complex APIs, of attempting to scythe back the growing thickets of complexity. We call complexity “technical debt” and realize that at least in principle it needs to be paid down someday.

“Globalization should be conceptualized as a series of adapting and co-evolving global systems, each characterized by unpredictability, irreversibility and co-evolution. Such systems lack finalized ‘equilibrium’ or ‘order’; and the many pools of order heighten overall disorder,” to quote the late John Urry. Interestingly, software could be viewed that way as well, interpreting, say, “the Internet” and “browsers” and “operating systems” and “machine learning” as global software systems.

Software is also something of a best possible case for making complex things simpler. It is rapidly distributed worldwide. It is relatively devoid of emotional or political axe-grinding. (I know, I know. I said “relatively.”) There are reasonably objective measures of performance and simplicity. And we’re all at least theoretically incentivized to simplify it.

So if we can make software simpler (both its tools and dependencies, and its actual end products), then that suggests we have at least some hope of keeping the world simple enough that crude mental models will continue to be vaguely useful. Conversely, if we can’t, then it seems likely that our reality will just keep growing more complex and unpredictable, and we will increasingly live in a world of whole flocks of black swans. I’m not sure whether to be optimistic or not. My mental model, it seems, is failing me.

[“source=techcrunch”]